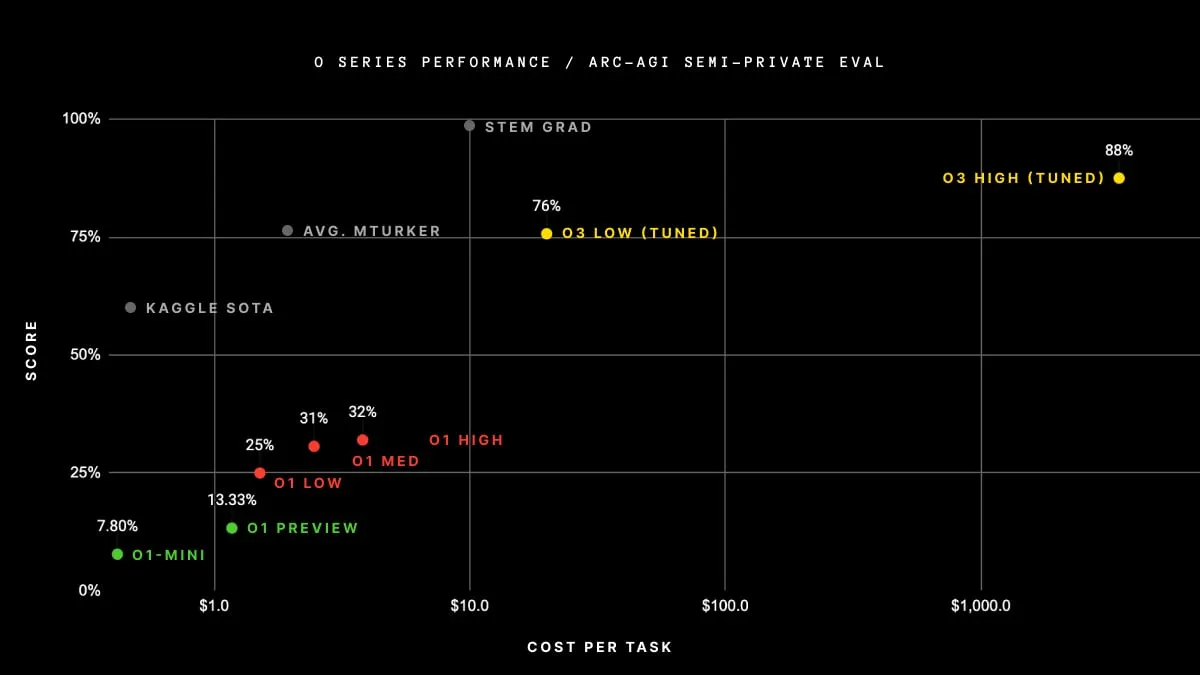

OpenAI's latest family of AI models has achieved what many thought was impossible, scoring an unprecedented 87.5% on the ARC-AGI benchmark (the Abstraction and Reasoning Corpus for Artificial General Intelligence), which is close to the minimum threshold of what could theoretically be considered “human.”

The ARC-AGI benchmark tests how close a model is to achieving artificial general intelligence, meaning whether it can think, solve problems, and adapt like a human in different situations, even ones it has not been trained for. The test is easy for humans to pass but very difficult for machines to understand and solve.

The San Francisco-based AI research company unveiled o3 and o3-mini last week as part of its “12 Days of OpenAI” campaign, just days after Google announced its own competitor to o1. The release showed that OpenAI's upcoming model was closer to reaching AGI than expected.

OpenAI's new reasoning-focused model represents a fundamental shift in how AI systems handle complex reasoning. Unlike traditional large language models that rely on pattern matching, o3 introduces a new “program synthesis” approach that allows it to tackle entirely new problems it has never encountered before.

“This is not just an incremental improvement, but a real achievement,” the ARC team said in its evaluation report. In a blog post, François Chollet, co-founder of the ARC Prize, went further, noting that “o3 is a system capable of adapting to tasks it has never encountered before, arguably approaching human-level performance in the ARC-AGI domain.”

For reference, here is what the ARC Prize says about human results: “The average human performance in the study was between 73.3% and 77.2% correct (public training set average: 76.2%; public evaluation set average: 64.2%).”

OpenAI's o3 set a record score of 87.5% using a high-compute configuration, ahead of any other AI model currently available.

Is o3 AGI? It depends on who you ask

Despite the impressive results, the ARC Prize board and other experts have said that AGI has not yet been achieved, so the $1 million prize remains unclaimed. But experts across the AI industry were not unanimous on whether o3 had broken through the AGI benchmark.

Some, including Chollet himself, have questioned whether the benchmark is even the best gauge of whether a model is approaching real, human-level problem solving. “Passing ARC-AGI does not equate to achieving AGI and, as a matter of fact, I don't think o3 is AGI yet,” Chollet said. “o3 still fails on some very easy tasks, indicating fundamental differences with human intelligence.”

He pointed to a newer version of the benchmark, which he said would provide a more precise measure of how close AI is to being able to reason like a human. “Early data points suggest that the upcoming ARC-AGI-2 benchmark will still pose a significant challenge to o3, potentially reducing its score to under 30% even at high compute (while a smart human would still be able to score over 95% with no training),” Chollet noted.

Other skeptics even claimed that OpenAI effectively gamed the test. “Models like o3 use planning tricks. They outline steps (‘scratchpads’) to improve accuracy, but they are still advanced text predictors. For example, when o3 ‘counts letters,’ it is generating text about counting, not truly reasoning,” Zeroqode co-founder Levon Terterian wrote on X.

Why is OpenAI's o3 not AGI?

OpenAI's new reasoning model, o3, is impressive on benchmarks but still a long way from artificial general intelligence.

What is artificial general intelligence?

AGI (Artificial General Intelligence) refers to a system capable of human-level understanding across tasks. It should:

- Play chess like a human. pic.twitter.com/yn4cuDTFte

- Levon Terterian (@Levon377) December 21, 2024

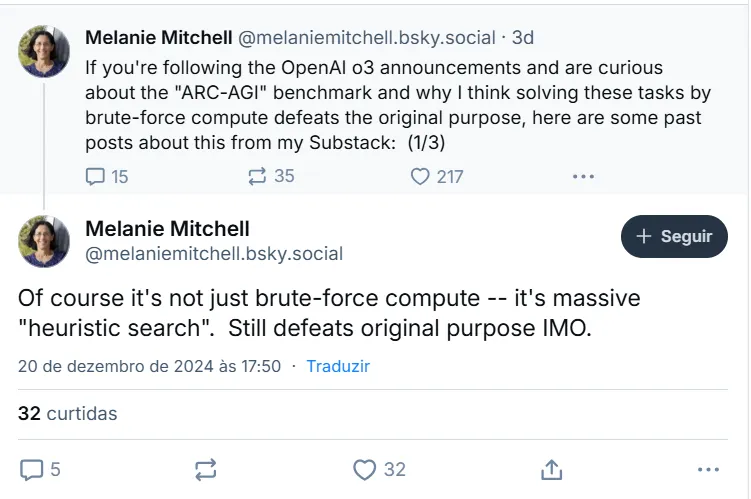

Other AI scientists share a similar view, among them award-winning AI researcher Melanie Mitchell, who argued that o3 is not truly reasoning but performing a “heuristic search.”

Chollet and others pointed out that OpenAI has not been transparent about how its models work. The models appear to have been trained on different chain-of-thought processes “in a way that is probably not too different from AlphaZero's approach to Monte Carlo tree search,” Mitchell said. In other words, the model does not know how to solve a new problem, and instead tries the most likely chains of thought against its extensive body of knowledge until it finds a solution.

Put differently, o3 isn't truly creative; it simply relies on a vast library of trial and error on its way to a solution.

“Brute force [does not equal] intelligence. o3 relied on extreme computing power to reach its unofficial score,” Jeff Joyce, host of the Humanity Unchained AI podcast, argued on LinkedIn. “True artificial general intelligence needs to solve problems efficiently. Even with unlimited resources, o3 has not been able to solve more than 100 puzzles that humans find easy.”

OpenAI researcher Vahid Kazemi is in the “This is AGI” camp. “In my opinion, we have already achieved AGI,” he said, pointing to the earlier o1 model, which he argued was the first designed to reason rather than just predict the next token.

He drew an analogy with scientific methodology, arguing that since science itself relies on systematic, repeatable steps to validate hypotheses, it is inconsistent to dismiss AI models as non-AGI simply because they follow a set of predefined instructions. However, OpenAI has “not achieved ‘better than any human at any task,’” he wrote.

In my opinion, we have already achieved Artificial General Intelligence (AGI), and it is even clearer with o1. We have not achieved “better than any human at any task,” but what we have is “better than most humans at most tasks.” Some say that LLMs only know how to follow a recipe. First, no one can really explain...

- Vahid Kazemi (@VahidK) December 6, 2024

For his part, OpenAI CEO Sam Altman has not taken a position on whether AGI has been achieved. He simply said that “o3 is an incredibly smart model,” and “o3-mini is an incredibly smart model but with really good performance and cost.”

Being smart may not be enough to claim that AGI has been achieved, at least not yet. But stay tuned, he added: “We see this as the beginning of the next phase of artificial intelligence.”

Edited by Andrew Hayward
